Statement (Cauchy-Schwarz Inequality):

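For any two vectors $u = (u_1, u_2, \ldots, u_n)$ and $v = (v_1, v_2, \ldots, v_n)$ in $\mathbb{R}^n$, the Cauchy-Schwarz inequality states:

$$\left(\sum_{i=1}^{n} u_i v_i\right)^2 \le \left(\sum_{i=1}^{n} u_i^2\right)\left(\sum_{i=1}^{n} v_i^2\right),$$

with equality if and only if the vectors are linearly dependent, that is, one vector is a scalar multiple of the other.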

This inequality bounds the absolute value of the dot product of two vectors by the product of their lengths, $|u \cdot v| \le \|u\|\,\|v\|$, laying the groundwork for notions such as the angle between vectors and orthogonality.
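As a quick sanity check on the statement, the inequality can be tested on random vectors. This is a minimal Python sketch, not part of the original text: the helpers `dot`, `norm`, and `cauchy_schwarz_holds` are hypothetical names, and the tolerance `tol` is an assumption that only absorbs floating-point rounding.

```python
import math
import random


def dot(u, v):
    """Dot product of two real vectors of the same length."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(ui * vi for ui, vi in zip(u, v))


def norm(u):
    """Euclidean norm ||u|| = sqrt(u . u)."""
    return math.sqrt(dot(u, u))


def cauchy_schwarz_holds(u, v, tol=1e-9):
    """Check |u . v| <= ||u|| ||v||, allowing a small floating-point slack."""
    return abs(dot(u, v)) <= norm(u) * norm(v) + tol


# Try many random pairs of vectors with a random dimension between 1 and 10.
random.seed(0)
for _ in range(1000):
    n = random.randint(1, 10)
    u = [random.uniform(-10, 10) for _ in range(n)]
    v = [random.uniform(-10, 10) for _ in range(n)]
    assert cauchy_schwarz_holds(u, v)
```

Parallel vectors such as (1, 2) and (2, 4) sit exactly on the boundary: both sides equal 10, up to rounding.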

Proof:

1. Constructing a quadratic in t:

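The expression f(t) is defined as:

$$f(t) = \sum_{i=1}^{n} (u_i t + v_i)^2,$$

where t is a real parameter. Assume $u \neq 0$; if $u = 0$, both sides of the inequality are 0 and there is nothing to prove.

Expanding f(t) yields:

$$f(t) = \sum_{i=1}^{n} \left(u_i^2 t^2 + 2 u_i v_i t + v_i^2\right).$$

This simplifies to:

$$f(t) = a t^2 + 2 b t + c,$$

where

$$a = \sum_{i=1}^{n} u_i^2 > 0, \qquad b = \sum_{i=1}^{n} u_i v_i, \qquad c = \sum_{i=1}^{n} v_i^2.$$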

2. Non-negativity of f(t):

As f(t) is a sum of squares, it is always non-negative.

3. Discriminant condition:

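Because $f(t) \ge 0$ for every real t and $a > 0$, the graph of f is an upward-opening parabola that never dips below the t-axis, so the equation $f(t) = 0$ has at most one (repeated) real root. Its discriminant must therefore satisfy:

$$\Delta = (2b)^2 - 4ac \le 0.$$

Simplifying, we get:

$$4b^2 - 4ac \le 0,$$

or equivalently:

$$b^2 \le ac.$$

Substituting the definitions of a, b, and c yields:

$$\left(\sum_{i=1}^{n} u_i v_i\right)^2 \le \left(\sum_{i=1}^{n} u_i^2\right)\left(\sum_{i=1}^{n} v_i^2\right).$$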

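4. Equality condition: Equality holds exactly when $\Delta = 0$. In that case f has a real root $t_0$, and since $f(t_0) = \sum_{i=1}^{n} (u_i t_0 + v_i)^2 = 0$ is a sum of squares, every term vanishes: $u_i t_0 + v_i = 0$ for all i. Hence $v = k u$ with $k = -t_0$, so u and v are linearly dependent. Conversely, if $v = k u$, both sides equal $k^2 \left(\sum_{i=1}^{n} u_i^2\right)^2$.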

This completes the proof of the Cauchy-Schwarz inequality.
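The four proof steps can be traced numerically. This is a minimal Python sketch under stated assumptions: the helper names `quadratic_coefficients`, `f`, and `discriminant` are hypothetical, vectors are plain lists, and the small tolerances only absorb floating-point rounding. It follows the proof's conventions, $f(t) = \sum (u_i t + v_i)^2 = a t^2 + 2bt + c$ with $a = \sum u_i^2$, $b = \sum u_i v_i$, $c = \sum v_i^2$.

```python
import random


def quadratic_coefficients(u, v):
    """Return (a, b, c) with a = sum u_i^2, b = sum u_i v_i, c = sum v_i^2."""
    a = sum(x * x for x in u)
    b = sum(x * y for x, y in zip(u, v))
    c = sum(y * y for y in v)
    return a, b, c


def f(t, u, v):
    """Evaluate f(t) = sum (u_i t + v_i)^2 directly as a sum of squares."""
    return sum((x * t + y) ** 2 for x, y in zip(u, v))


def discriminant(u, v):
    """Discriminant (2b)^2 - 4ac of the quadratic a t^2 + 2 b t + c."""
    a, b, c = quadratic_coefficients(u, v)
    return (2 * b) ** 2 - 4 * a * c


random.seed(1)
u = [random.uniform(-5, 5) for _ in range(4)]
v = [random.uniform(-5, 5) for _ in range(4)]
a, b, c = quadratic_coefficients(u, v)

for t in (-2.0, -0.5, 0.0, 1.5, 3.0):
    # Step 1: the expanded form a t^2 + 2 b t + c matches the sum of squares.
    assert abs(f(t, u, v) - (a * t * t + 2 * b * t + c)) < 1e-9
    # Step 2: f(t) is never negative.
    assert f(t, u, v) >= 0

# Step 3: the discriminant is non-positive, i.e. b^2 <= a c.
assert discriminant(u, v) <= 1e-9
assert b * b <= a * c + 1e-9

# Step 4: for v = k u the discriminant vanishes and t0 = -k is a root of f.
k = 2.0
w = [k * x for x in u]
assert abs(discriminant(u, w)) < 1e-9
assert f(-k, u, w) == 0
```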

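The Cauchy-Schwarz inequality is a fundamental result in linear algebra and analysis. In vector notation it states that for vectors $u, v \in \mathbb{R}^n$ or $\mathbb{C}^n$:

$$|\langle u, v \rangle| \le \|u\|\,\|v\|,$$

where

$$\langle u, v \rangle = \sum_{i=1}^{n} u_i \overline{v_i}$$

is the dot product (for real vectors the conjugate has no effect, so $\langle u, v \rangle = u \cdot v$) and

$$\|u\| = \sqrt{\langle u, u \rangle} = \sqrt{\sum_{i=1}^{n} |u_i|^2}$$

is the Euclidean norm.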
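For complex vectors the conjugate in the dot product matters. A minimal sketch using Python's built-in `complex` type; `inner` and `norm` are hypothetical helper names, and the sample vectors are arbitrary.

```python
import math


def inner(u, v):
    """<u, v> = sum u_i * conj(v_i); for real entries this is the ordinary dot product."""
    return sum(x * complex(y).conjugate() for x, y in zip(u, v))


def norm(u):
    """Euclidean norm ||u|| = sqrt(<u, u>); <u, u> is real and non-negative."""
    return math.sqrt(inner(u, u).real)


u = [1 + 2j, -1j, 3]
v = [2 - 1j, 4 + 1j, -1 + 1j]
lhs = abs(inner(u, v))
rhs = norm(u) * norm(v)
print(f"|<u, v>| = {lhs:.4f} <= ||u|| ||v|| = {rhs:.4f}")
assert lhs <= rhs
```

Dropping the conjugate would make $\langle u, u \rangle$ complex in general, so it could no longer serve as a squared length.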

Example 1: Real numbers

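Let $u = (1, 2, 3)$ and $v = (4, -1, 2)$. Then

$$u \cdot v = 1 \cdot 4 + 2 \cdot (-1) + 3 \cdot 2 = 8, \qquad \|u\|^2 = 1 + 4 + 9 = 14, \qquad \|v\|^2 = 16 + 1 + 4 = 21.$$

The Cauchy-Schwarz inequality holds: $(u \cdot v)^2 = 64 \le 14 \cdot 21 = 294$, or equivalently $8 \le \sqrt{294} \approx 17.15$.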
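The arithmetic of Example 1 can be reproduced in a few lines of Python (a sketch; the variable names are illustrative):

```python
import math

u = [1, 2, 3]
v = [4, -1, 2]

dot_uv = sum(a * b for a, b in zip(u, v))  # 1*4 + 2*(-1) + 3*2
norm_u_sq = sum(a * a for a in u)          # 1 + 4 + 9
norm_v_sq = sum(b * b for b in v)          # 16 + 1 + 4

print(dot_uv, norm_u_sq, norm_v_sq)                              # 8 14 21
print(dot_uv ** 2, "<=", norm_u_sq * norm_v_sq)                  # 64 <= 294
print(dot_uv, "<=", round(math.sqrt(norm_u_sq * norm_v_sq), 2))  # 8 <= 17.15
```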

Example 2: Continuous Functions

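Let $f(x) = x$ and $g(x) = 1$ on the interval $[0, 1]$. The inner product is defined as:

$$\langle f, g \rangle = \int_0^1 f(x)\,g(x)\,dx.$$

Verify the inequality. The three integrals are:

$$\langle f, g \rangle = \int_0^1 x\,dx = \frac{1}{2}, \qquad \|f\|^2 = \int_0^1 x^2\,dx = \frac{1}{3}, \qquad \|g\|^2 = \int_0^1 1\,dx = 1.$$

Since $\langle f, g \rangle^2 = \frac{1}{4} \le \frac{1}{3} \cdot 1 = \|f\|^2\,\|g\|^2$, the inequality holds.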
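The integrals in this example can be approximated numerically. This sketch swaps in the midpoint rule for exact integration, an assumption made only for illustration; the helper name `inner` and the step count `n` are hypothetical. The midpoint rule is exact for the linear integrand $x$, and for $x^2$ its error is about $h^2/12$ with $h = 1/n$.

```python
def inner(f, g, n=10_000):
    """Approximate <f, g> = integral over [0, 1] of f(x) g(x) dx with the midpoint rule."""
    h = 1.0 / n
    return h * sum(f((i + 0.5) * h) * g((i + 0.5) * h) for i in range(n))


def f(x):
    return x


def g(x):
    return 1.0


fg = inner(f, g)  # exact value 1/2
ff = inner(f, f)  # exact value 1/3
gg = inner(g, g)  # exact value 1
print(f"<f, g>^2 = {fg ** 2:.6f} <= ||f||^2 ||g||^2 = {ff * gg:.6f}")
```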

Why is the Cauchy-Schwarz inequality important?

The Cauchy–Schwarz Inequality is fundamental to many mathematical and scientific disciplines. Here are some places where it plays a crucial role:

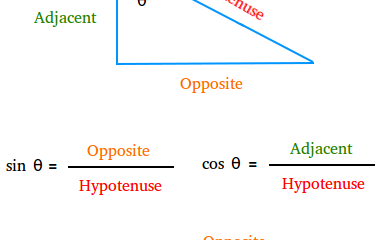

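Geometry of Vectors: Because $|u \cdot v| \le \|u\|\,\|v\|$, the ratio $\cos\theta = \frac{u \cdot v}{\|u\|\,\|v\|}$ always lies in $[-1, 1]$, so it defines the angle θ between two nonzero vectors; orthogonality is the case $u \cdot v = 0$.

Linear Algebra: It is essential for proving other inequalities, such as the triangle inequality $\|u + v\| \le \|u\| + \|v\|$ for norms induced by an inner product.

Statistics: It implies $|\operatorname{Cov}(X, Y)| \le \sigma_X \sigma_Y$, which underpins covariance and guarantees that the correlation coefficient of two random variables lies in $[-1, 1]$.

Optimization: It is commonly used to derive bounds in problem solving and algorithm analysis.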
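The geometric, linear-algebra, and statistical uses above can be illustrated together. A minimal sketch, assuming plain Python lists; `angle` and `correlation` are hypothetical helper names, and the correlation is computed by hand as the cosine of the angle between mean-centred data vectors (the Pearson formula) rather than through any library.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def norm(u):
    return math.sqrt(dot(u, u))


def angle(u, v):
    """Angle between nonzero vectors; Cauchy-Schwarz keeps the cosine in [-1, 1]."""
    cos_theta = dot(u, v) / (norm(u) * norm(v))
    # Clamp only to absorb floating-point rounding just outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, cos_theta)))


def correlation(xs, ys):
    """Pearson correlation: cosine of the angle between the mean-centred data vectors."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    dx = [x - mx for x in xs]
    dy = [y - my for y in ys]
    return dot(dx, dy) / (norm(dx) * norm(dy))


# Geometry: perpendicular vectors have a 90 degree angle.
print(math.degrees(angle([1.0, 0.0], [0.0, 2.0])))  # about 90 degrees: orthogonal

# Triangle inequality, which follows from Cauchy-Schwarz: ||a + b|| <= ||a|| + ||b||.
a, b = [1.0, 2.0, 3.0], [4.0, -1.0, 2.0]
s = [x + y for x, y in zip(a, b)]
assert norm(s) <= norm(a) + norm(b)

# Statistics: the correlation coefficient always lies in [-1, 1].
r = correlation([1, 2, 3, 4], [2, 4, 5, 9])
assert -1.0 <= r <= 1.0
```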
